Introduction

Setting up NVMe RAID storage on a Raspberry Pi 5 might sound like overkill, but if you’ve got two drives sitting around and a need for redundancy, it’s a solid weekend project. The Raspberry Pi 5 exposes a PCIe lane out of the box. That means you can boot from NVMe, run dual SSDs through a dual-slot adapter, and set up RAID1 mirroring with mdadm, without needing a hardware RAID controller.

This guide covers what hardware you need, how to wire it up, and how to configure a mirrored RAID array for data redundancy. You’ll also learn how to boot directly from NVMe and keep your setup running cool, quiet, and recoverable if a drive fails.

Key Takeaways

- Raspberry Pi 5 supports booting from NVMe and can run RAID1 with software tools like mdadm.

- A dual-slot NVMe adapter, proper power, and active cooling are required for stable performance.

- RAID1 mirrors your data but is not a backup solution. Use off-site or external backups as well.

- Boot from NVMe RAID is possible, though the /boot partition usually stays on the SD card.

- Monitoring tools like smartmontools and periodic RAID checks help ensure long-term health.

- Simplicity beats complexity. Only use advanced RAID setups if you really need them.

Hardware Checklist

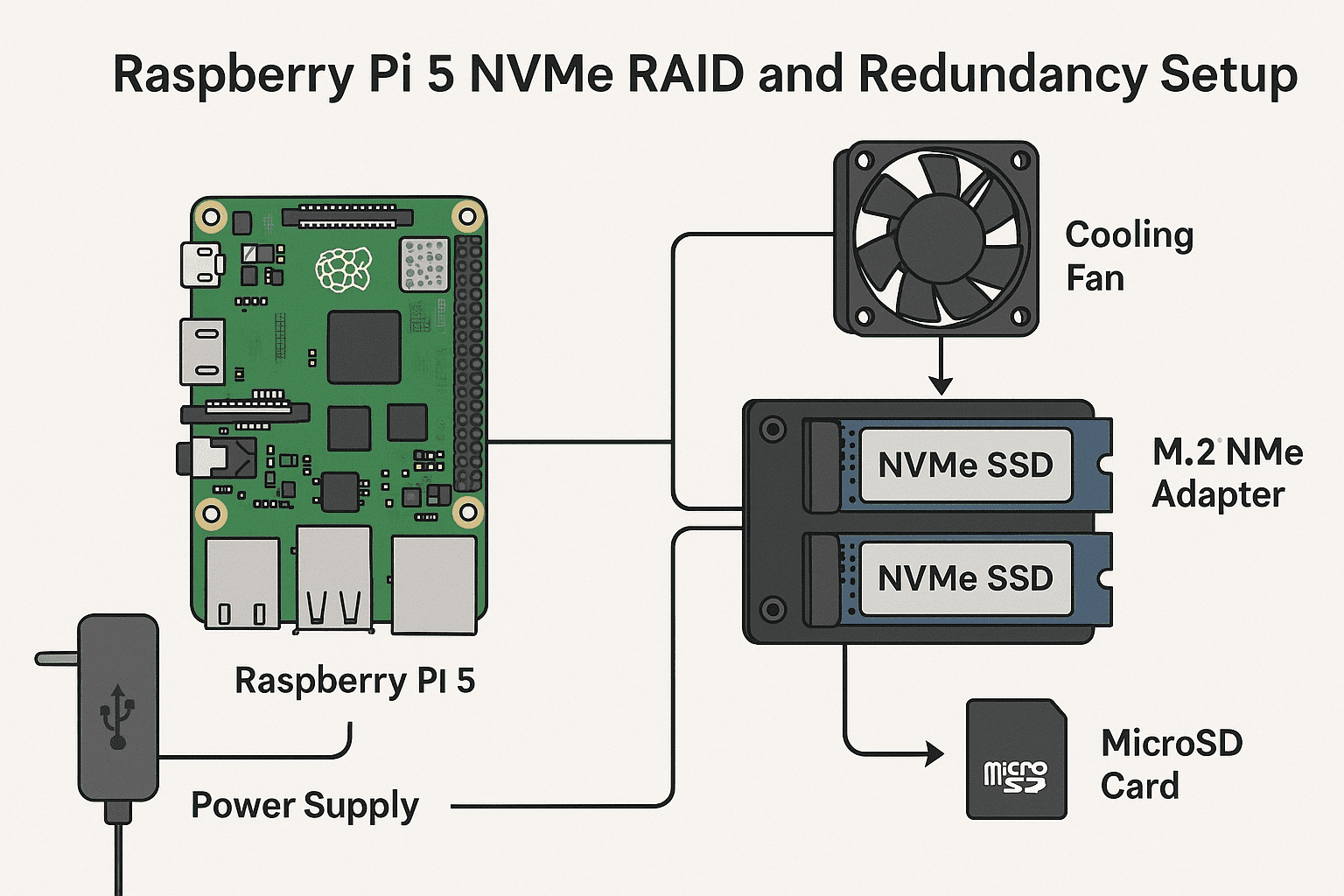

Raspberry Pi 5

This is the main board. Make sure you’re running the latest firmware so it supports booting from PCIe-connected devices.

M.2 NVMe Adapter

You’ll need a dual-slot M.2 adapter compatible with the Raspberry Pi 5’s PCIe connector, such as the Geekworm X1004. Note that the official Raspberry Pi M.2 HAT+ has a single M.2 slot, so on its own it cannot host both drives.

Two NVMe SSDs

Stick with models under 2 TB and look for lower power draw if you’re not using an external power board. 2280-size SSDs are the standard fit.

Power Supply

Use a 5V 5A USB-C power supply. Avoid bargain adapters because they often don’t deliver steady current. A stable power source keeps your drives and the Pi running reliably.

Cooling Setup

Get a fan or active cooling case. SSDs heat up quickly under RAID writes, and the Pi’s CPU won’t enjoy the extra warmth either.

MicroSD Card

Needed for the initial OS installation. You can later switch the root filesystem over to the RAID array.

Cables and Screws

Most NVMe adapters include standoffs and screws, but it’s worth checking your kit first. You’ll also want a high-quality USB-C cable and decent airflow around the board.

Optional Case

Not required, but it helps with organization and makes it easier to mount or stack if you’re going for a clean or permanent build.

Initial Setup

Flash Raspberry Pi OS

Use Raspberry Pi Imager or Balena Etcher to flash the latest Raspberry Pi OS to your microSD card. Go with the 64-bit version. Lite or Desktop both work, depending on whether you need a GUI.

Boot and Update Firmware

Insert the SD card, connect your power supply, keyboard, and monitor, and boot up. Open a terminal and run sudo apt update && sudo apt full-upgrade. Then run sudo rpi-eeprom-update -a to install any pending bootloader update, and reboot so the Pi is using the latest bootloader.

Enable PCIe Support

Open the boot config: sudo nano /boot/firmware/config.txt. Add this line at the bottom:

dtparam=pciex1

Save and reboot. This tells the Pi to enable its external PCIe connector, which is the dedicated ribbon-cable (FPC) connector rather than the GPIO header.

Verify SSD Detection

After reboot, open a terminal and run:

lsblk

You should see two NVMe devices listed (e.g., /dev/nvme0n1 and /dev/nvme1n1). If not, check your adapter and connections. You can also run:

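sudo nvme list

to get more detailed information about connected SSDs. This command comes from the nvme-cli package, so install it first with sudo apt install nvme-cli if it is missing.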
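The detection check can also be scripted. The following is a minimal sketch, not part of the original guide: the function names count_nvme_disks and check_nvme_count are hypothetical, and it assumes lsblk is called with -d (whole disks only), -n (no header), and -o NAME,TYPE so each line looks like "nvme0n1 disk".

```shell
# Count whole-disk NVMe devices in `lsblk -dn -o NAME,TYPE` output read from stdin.
count_nvme_disks() {
    awk '$1 ~ /^nvme[0-9]+n[0-9]+$/ && $2 == "disk" { n++ } END { print n + 0 }'
}

# Print a short verdict for the expected number of drives (default: 2).
# Returns non-zero when the count does not match.
check_nvme_count() {
    expected="${1:-2}"
    found="$(count_nvme_disks)"
    if [ "$found" -eq "$expected" ]; then
        echo "ok: $found NVMe drives detected"
    else
        echo "problem: expected $expected NVMe drives, found $found"
        return 1
    fi
}
```

A typical call would be lsblk -dn -o NAME,TYPE | check_nvme_count 2, which is handy in a setup script that should stop early when a drive is missing.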

Partition and Format NVMe Drives

Identify Each Drive

Start by running:

lsblk

Look at the listed NVMe devices. You might see /dev/nvme0n1 and /dev/nvme1n1. Double-check the size to know which one is which.

Create Partitions

Use fdisk or parted to partition each drive. Here’s an example using fdisk:

sudo fdisk /dev/nvme0n1

Press g to create a new GPT partition table, then n to make a new partition. Accept the defaults. Press w to write changes. Repeat for the second drive.
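If you would rather not step through fdisk by hand, the same layout can be produced non-interactively with parted. This is a sketch under assumptions: partition_for_raid and the APPLY variable are hypothetical names, and the raid flag on partition 1 is an optional extra that the fdisk steps above do not set. By default the function only prints the command, so you can read it before anything touches a drive.

```shell
# Print (or, with APPLY=1, run) the parted command that gives one drive a GPT
# label and a single partition spanning the whole disk, flagged for RAID use.
# WARNING: with APPLY=1 this replaces the partition table on the target drive.
partition_for_raid() {
    dev="$1"
    # Build the command once so the dry run and the real run cannot drift apart.
    set -- parted --script "$dev" mklabel gpt mkpart primary 0% 100% set 1 raid on
    if [ "${APPLY:-0}" = "1" ]; then
        sudo "$@"
    else
        echo "$@"
    fi
}
```

Running partition_for_raid /dev/nvme0n1 prints the command for review; APPLY=1 partition_for_raid /dev/nvme0n1 executes it. Double-check the device name first, since pointing it at the wrong disk destroys that disk’s partition table.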

Format Each Drive

Format the partitions with ext4:

sudo mkfs.ext4 /dev/nvme0n1p1

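sudo mkfs.ext4 /dev/nvme1n1p1

You can use other filesystems like btrfs, but ext4 is simple and reliable for RAID1. Note that mdadm will detect these filesystems when you create the array and ask you to confirm, and the array itself gets a fresh filesystem in a later step.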

Label the Partitions (Optional)

If you want to label the drives:

sudo e2label /dev/nvme0n1p1 raid1-a

sudo e2label /dev/nvme1n1p1 raid1-b

Check the Result

Confirm with:

lsblk -o NAME,FSTYPE,LABEL,SIZE,MOUNTPOINT

Both partitions should be labeled and formatted.

Create a RAID1 Array with mdadm

Install mdadm

If it’s not already installed:

sudo apt install mdadm

Create the RAID1 Array

Use the two NVMe partitions to create a mirrored array:

sudo mdadm --create --verbose /dev/md0 --level=1 --raid-devices=2 /dev/nvme0n1p1 /dev/nvme1n1p1

This builds a RAID1 device at /dev/md0.

Monitor the Sync Process

Check progress with:

cat /proc/mdstat

It can take a while, depending on drive size.
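To pull just the progress figure out of /proc/mdstat, a small parser helps. The sketch below is illustrative only: md_sync_progress is a hypothetical name, and it assumes the usual mdstat layout in which an array block starts with "md0 : ..." and a running sync shows a line containing "resync" or "recovery" followed by a percentage.

```shell
# Print the resync/recovery percentage for one array from an mdstat-style file,
# or "idle" when no sync with a percentage is in progress.
# Arguments: array name (default md0), file to read (default /proc/mdstat).
md_sync_progress() {
    array="${1:-md0}"
    file="${2:-/proc/mdstat}"
    awk -v a="$array" '
        $2 == ":"  { in_array = ($1 == a); next }   # header line of any block
        NF == 0    { in_array = 0 }                  # blank line ends a block
        in_array && /resync|recovery/ {
            for (i = 1; i <= NF; i++)
                if ($i ~ /%$/) { print $i; found = 1 }
        }
        END { if (!found) print "idle" }
    ' "$file"
}
```

Calling md_sync_progress md0 every few seconds (for example inside watch) gives a compact view of how far the initial sync has come.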

Create mdadm Config File

Once the array is synced, create the config so it auto-assembles on boot:

sudo mdadm --detail --scan | sudo tee -a /etc/mdadm/mdadm.conf

Then update initramfs:

sudo update-initramfs -u

Create Filesystem on the RAID Array

Format the array:

sudo mkfs.ext4 /dev/md0

Or use btrfs if you prefer:

sudo mkfs.btrfs /dev/md0

Mount It Temporarily

Make a mount point and test it:

sudo mkdir /mnt/raid

sudo mount /dev/md0 /mnt/raid

Check That It Works

List the mounted file system:

df -h

Look for /dev/md0 and make sure it’s mounted to /mnt/raid.

Mounting and Boot Configuration

Get the UUID for the RAID Array

First, find the UUID so you can set it up in fstab and boot config:

sudo blkid /dev/md0

Copy the long UUID string for use in the next steps.

Mount the RAID Automatically

Edit fstab to mount the RAID array at boot:

sudo nano /etc/fstab

Add this line at the bottom:

UUID=your-uuid-here /mnt/raid ext4 defaults,nofail,discard 0 0

Replace your-uuid-here with the actual UUID from the previous step. Save and exit.
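Copying a UUID by hand is an easy place to make a typo, so it can help to generate the fstab line instead. This is a minimal sketch: fstab_entry is a hypothetical helper, and it simply reproduces the ext4 entry shown above with the same options.

```shell
# Build an fstab entry for an ext4 filesystem identified by UUID.
# Options mirror the manual entry: defaults,nofail,discard.
# Arguments: UUID, mount point (default /mnt/raid).
fstab_entry() {
    uuid="$1"
    mountpoint="${2:-/mnt/raid}"
    printf 'UUID=%s %s ext4 defaults,nofail,discard 0 0\n' "$uuid" "$mountpoint"
}
```

One way to use it is fstab_entry "$(sudo blkid -s UUID -o value /dev/md0)", which prints the finished line for you to review before adding it to /etc/fstab. Running sudo mount -a afterwards is a quick way to confirm the entry mounts without a reboot.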

Move Root Filesystem to RAID (Optional Advanced Step)

If you want to boot entirely from the RAID array, clone the current root to it:

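sudo rsync -aAXv --exclude={"/mnt/*","/proc/*","/sys/*","/tmp/*","/dev/*","/run/*","/media/*","/lost+found"} / /mnt/raid

Excluding the contents of each directory (/proc/* rather than /proc) keeps the empty mount-point directories that the new root needs at boot. Double-check all files copied correctly. This is optional and requires extra boot config.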

Set RAID as Root in cmdline.txt

Find the UUID of /dev/md0 and edit your boot parameters:

sudo nano /boot/firmware/cmdline.txt

Keep everything on a single line, and replace the root=PARTUUID=... parameter with:

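root=UUID=your-uuid-here rootfstype=ext4

Again, use the correct UUID from earlier. Also change the / entry in /mnt/raid/etc/fstab to the same UUID, so the copied system does not still point at the SD card’s root partition.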
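Because cmdline.txt is one long line, editing it by hand invites mistakes. The following sketch shows one way to swap only the root= parameter with sed. The name with_root_uuid is hypothetical, and the function writes to standard output rather than editing the file, so the original stays untouched until you have inspected the result.

```shell
# Rewrite the root= parameter in a cmdline.txt-style file to point at a
# filesystem UUID. The result goes to stdout; the input file is not modified.
# Arguments: path to the file, UUID of the new root filesystem.
with_root_uuid() {
    file="$1"
    uuid="$2"
    # Match root= only at the start of the line or after a space, so that
    # parameters such as rootfstype= and rootwait are left alone.
    sed -E "s#(^| )root=[^ ]+#\1root=UUID=${uuid}#" "$file"
}
```

For example, with_root_uuid /boot/firmware/cmdline.txt your-uuid-here > /tmp/cmdline.new produces a candidate file. After checking it, back up the original and copy the new file into place with sudo.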

Reboot and Confirm

Unmount the RAID, then reboot:

sudo umount /mnt/raid

sudo reboot

After rebooting, run:

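mount | grep ' / '

to verify that / is coming from /dev/md0. The spaces around the slash matter, because a bare grep / would match every line of the mount output.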

Boot from RAID (NVMe)

Check Current Boot Source

After rebooting, verify that your Pi is actually booting from the NVMe RAID:

findmnt /

The output should show /dev/md0 as the source for /.

Keep /boot on SD Card

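Even when the root filesystem lives on the NVMe array, the Pi’s bootloader does not assemble mdadm arrays, so the boot partition (mounted at /boot/firmware on current Raspberry Pi OS) has to stay on a plain device such as the SD card or a USB drive. Leave the microSD in place with the original boot partition intact unless you migrate that separately.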

Enable Boot Fallback (Optional)

If one SSD fails, you want the system to boot from the surviving mirror. This works by default with RAID1, but test it:

- Power down the Pi.

- Remove one SSD.

- Power up and see if it still boots.

- Replace the SSD and resync if needed.

Update Bootloader if Needed

If you haven’t updated EEPROM in a while, run:

sudo rpi-eeprom-update -a

and reboot. The -a flag installs a pending update rather than only reporting it. This makes sure NVMe boot support is active.

Test Clean Shutdown and Reboot

Run a few reboots to be sure the array mounts cleanly and doesn’t fall into degraded mode.

Monitor Boot Logs

Use:

dmesg | grep md0

to check for errors or warnings related to your RAID volume during startup.

Add Redundancy & Failover

Verify RAID1 Redundancy

RAID1 mirrors data across two drives. If one fails, the other keeps running. You can simulate this by powering down, removing one SSD, and booting again. Check that the system still functions.
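After a test like this, the member map in /proc/mdstat tells you whether the array is running on one drive. The sketch below is an assumption-laden illustration: md_health is a hypothetical name, and it relies on the status line ending in a map such as [UU] (both members up) or [U_] (one missing).

```shell
# Report "healthy", "degraded", "unknown", or "missing" for one array in an
# mdstat-style file.
# Arguments: array name (default md0), file to read (default /proc/mdstat).
md_health() {
    array="${1:-md0}"
    file="${2:-/proc/mdstat}"
    awk -v a="$array" '
        $2 == ":" { in_array = ($1 == a); if (in_array) seen = 1; next }
        # The status line carries a member map such as [UU] or [U_].
        in_array && match($0, /\[[U_]+\]/) {
            map = substr($0, RSTART, RLENGTH)
            state = (index(map, "_") > 0) ? "degraded" : "healthy"
        }
        END { print (seen ? (state ? state : "unknown") : "missing") }
    ' "$file"
}
```

md_health md0 prints a single word, which makes it easy to use in a login message or a monitoring script that should only speak up when the answer is not "healthy".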

Set up Email Alerts

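Install mailutils to get email alerts. It provides the mail command; you also need a working mail transfer agent (MTA) on the Pi for messages to actually be delivered:

sudo apt install mailutils

Edit /etc/mdadm/mdadm.conf and add your email: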

MAILADDR your-email@example.com

Then restart mdadm monitor:

sudo systemctl restart mdmonitor

Add Monitoring with smartmontools

Install and enable S.M.A.R.T. disk checks:

sudo apt install smartmontools

Edit /etc/smartd.conf to monitor NVMe drives:

/dev/nvme0 -a -o on -S on -s (S/../.././02|L/../../6/03)

/dev/nvme1 -a -o on -S on -s (S/../.././02|L/../../6/03)

Restart the daemon:

sudo systemctl restart smartd

Plan for Drive Replacement

If a drive fails:

- Power down the Pi.

- Swap the dead SSD for a new one.

- Boot up and run:

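sudo mdadm --add /dev/md0 /dev/nvmeXn1p1

Replace X with the new drive’s number. The new SSD first needs the same partition layout as the original, so that the p1 partition exists. Adding it starts resyncing the array.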

Automate Periodic Checks

Create a cron job to log RAID status. Use root’s crontab, because a regular user cannot append to files in /var/log:

sudo crontab -e

Add:

0 6 * * * cat /proc/mdstat >> /var/log/raid-check.log

Back Up Non-RAID Data Separately

RAID1 is not a backup. It protects against disk failure, not deletion or corruption. Use rsync, restic, or similar tools to copy important files to an external drive or remote server regularly.

Performance Tuning

Benchmark Your Drives

Use fio or dd to test read and write speeds. Example:

sudo apt install fio

fio --name=readtest --filename=/mnt/raid/testfile --size=500M --bs=4k --rw=read --ioengine=libaio --iodepth=16 --direct=1

Adjust I/O Scheduler

Check the current scheduler:

cat /sys/block/nvme0n1/queue/scheduler

You can switch to none or mq-deadline:

echo none | sudo tee /sys/block/nvme0n1/queue/scheduler

Repeat for other drives.
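The kernel marks the active scheduler with square brackets, for example "[none] mq-deadline". A short helper can read that marker for every NVMe device at once. This is a sketch with hypothetical names (active_scheduler, list_nvme_schedulers); the optional argument exists only so the function can be pointed at a different directory tree than /sys/block.

```shell
# Extract the active scheduler (the bracketed name) from a queue/scheduler
# line read on stdin.
active_scheduler() {
    sed -n -E 's/.*\[([^]]+)\].*/\1/p'
}

# Print "device: scheduler" for every NVMe namespace under a sysfs-style root.
# Argument: root directory to scan (default /sys/block).
list_nvme_schedulers() {
    root="${1:-/sys/block}"
    for f in "$root"/nvme*/queue/scheduler; do
        [ -r "$f" ] || continue          # skip when the glob matched nothing
        dev="${f#"$root"/}"              # e.g. nvme0n1/queue/scheduler
        printf '%s: %s\n' "${dev%%/*}" "$(active_scheduler < "$f")"
    done
}
```

Running list_nvme_schedulers after a reboot is a quick way to see whether your change is still in effect, since a value written through tee into /sys does not persist across restarts.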

Enable TRIM Support

Edit fstab to include discard for ext4:

UUID=your-uuid-here /mnt/raid ext4 defaults,nofail,discard 0 0

Alternatively, run fstrim manually:

sudo fstrim -v /mnt/raid

Monitor Temperatures

Install nvme-cli:

sudo apt install nvme-cli

Then run:

sudo nvme smart-log /dev/nvme0

Watch for rising temps under load.

Add or Upgrade Cooling

If the SSDs or the Pi run hot during RAID writes, add a fan or use better thermal pads. Keep temps under 70°C to avoid throttling.
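To check the 70°C guideline automatically, you can parse the temperature line from nvme smart-log. The sketch below makes an assumption about the output format: it expects a line that starts with "temperature" followed by a colon and a number in degrees Celsius, which may vary between nvme-cli versions. The names nvme_temp_c and check_nvme_temp are hypothetical.

```shell
# Read `nvme smart-log` output on stdin and print the composite temperature
# as a bare number. Assumes a line like "temperature : 38 C" (ASSUMPTION:
# exact spacing and unit text differ between nvme-cli versions).
nvme_temp_c() {
    awk -F: '/^temperature/ { print $2 + 0; exit }'
}

# Print a verdict and return non-zero when the temperature exceeds a limit.
# Argument: limit in degrees Celsius (default 70).
check_nvme_temp() {
    limit="${1:-70}"
    temp="$(nvme_temp_c)"
    if [ -z "$temp" ]; then
        echo "no temperature line found"
        return 2
    fi
    if [ "$temp" -gt "$limit" ]; then
        echo "warning: ${temp}C exceeds ${limit}C"
        return 1
    fi
    echo "ok: ${temp}C"
}
```

A call such as sudo nvme smart-log /dev/nvme0 | check_nvme_temp 70 fits well in a cron job that runs while the array is under load.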

Avoid USB Power Limitations

Make sure your power supply is solid. If your adapter draws power through USB, you might see random disconnects under load. Use powered adapters if needed.

Test Under Load

Use stress-ng to simulate load and watch system behavior:

sudo apt install stress-ng

stress-ng --hdd 2 --timeout 60s

Log I/O Activity

Install iotop to see which processes are hitting the disk:

sudo apt install iotop

sudo iotop

Keep Firmware Updated

Run:

sudo rpi-eeprom-update

and make sure your NVMe SSDs have the latest firmware if the vendor provides a tool.

Use ext4 Mount Options Wisely

Add options like noatime or commit=60 for less write overhead:

UUID=your-uuid-here /mnt/raid ext4 defaults,nofail,discard,noatime,commit=60 0 0

Maintenance and Health Checks

Automate SMART Reports

If you haven’t already, make sure smartmontools is installed. Set up weekly reports to log health data:

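sudo smartctl -a /dev/nvme0n1 | sudo tee -a /var/log/smart_nvme0.log > /dev/null

sudo smartctl -a /dev/nvme1n1 | sudo tee -a /var/log/smart_nvme1.log > /dev/null

The output goes through sudo tee because a plain >> redirect runs as your own user and cannot write to /var/log. Add these commands to a cron job for regular logging.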

Run Weekly RAID Checks

To make sure the RAID stays in sync, create a cron job:

0 2 * * 0 /usr/share/mdadm/checkarray --cron --all >> /var/log/mdadm_check.log

Verify RAID Health Manually

Run this to check for errors:

cat /proc/mdstat

Also use:

sudo mdadm --detail /dev/md0

to get status details and check if it’s degraded.

Review System Logs for Warnings

Search logs for drive errors:

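journalctl -p 3 -xb

Or focus on mdadm. Recent Raspberry Pi OS releases log to the systemd journal and may not have /var/log/syslog, so search the journal instead:

journalctl -b | grep -i mdadm

Update System and Firmware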

Regularly run:

sudo apt update && sudo apt upgrade

sudo rpi-eeprom-update

This helps fix bugs that might impact performance or RAID behavior.

Backup Config Files

Copy your mdadm config:

sudo cp /etc/mdadm/mdadm.conf /mnt/raid/mdadm.conf.backup

Replace Weak SSDs Proactively

If SMART data shows media errors, a shrinking available spare, a high percentage used, or persistently high temperatures, consider swapping the SSD before it fails. Cheaper drives tend to degrade faster.
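The wear indicators worth watching can be filtered out of a full smartctl report. This is a rough sketch, assuming the field labels that smartctl prints in its NVMe health section (such as "Percentage Used" and "Available Spare"); the function names are hypothetical.

```shell
# Keep only the wear-related lines from `smartctl -a` output for an NVMe drive
# (read on stdin). ASSUMPTION: field labels match smartctl's NVMe health log.
nvme_health_fields() {
    grep -E '^(Available Spare|Percentage Used|Media and Data Integrity Errors|Temperature):'
}

# Print only the "Percentage Used" number, the drive's own estimate of how
# much of its rated endurance has been consumed.
nvme_percent_used() {
    awk -F: '/^Percentage Used:/ { gsub(/[ %]/, "", $2); print $2; exit }'
}
```

For instance, sudo smartctl -a /dev/nvme0n1 | nvme_health_fields gives a four-line summary that is easier to compare week over week than the full report.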

Test Rebuild Time Occasionally

You can remove a drive and re-add it to simulate a rebuild. This is useful to estimate how long recovery will take.
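A rebuild can also be triggered in software, without unplugging anything, by marking a member as failed, removing it, and adding it back. The sketch below uses mdadm’s --fail, --remove, and --add options; simulate_rebuild and the APPLY variable are hypothetical names. It prints the commands by default so you can review them first.

```shell
# Print (or, with APPLY=1, run) the mdadm steps that fail, remove, and re-add
# one member so the array rebuilds onto it.
# WARNING: the array has no redundancy until the rebuild completes.
# Arguments: array device (e.g. /dev/md0), member partition.
simulate_rebuild() {
    array="$1"
    member="$2"
    for action in --fail --remove --add; do
        if [ "${APPLY:-0}" = "1" ]; then
            sudo mdadm "$array" "$action" "$member" || return 1
        else
            echo "mdadm $array $action $member"
        fi
    done
}
```

Try simulate_rebuild /dev/md0 /dev/nvme1n1p1 to see the three commands, then time the recovery by watching /proc/mdstat. If the array has a write-intent bitmap, mdadm may only resync changed blocks, so the measured time can be shorter than a full rebuild onto a blank drive.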

Check Disk Space Regularly

Use:

df -h

Keep an eye on remaining space. RAID doesn’t protect you from running out of room.
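A simple threshold check saves you from having to remember to look. This sketch reads df output in POSIX format (df -P), where the fifth column of the second line is the usage percentage. The names usage_percent and check_usage are hypothetical, and the default limit of 85 is an arbitrary example value.

```shell
# Read `df -P <mountpoint>` output on stdin and print the usage percentage
# as a bare number (fifth column of the first data line).
usage_percent() {
    awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Print a verdict and return non-zero when usage reaches a limit.
# Argument: limit in percent (default 85, an arbitrary example).
check_usage() {
    limit="${1:-85}"
    used="$(usage_percent)"
    if [ -z "$used" ]; then
        echo "no usage data found"
        return 2
    fi
    if [ "$used" -ge "$limit" ]; then
        echo "warning: ${used}% used"
        return 1
    fi
    echo "ok: ${used}% used"
}
```

Calling df -P /mnt/raid | check_usage 90 from cron gives you a warning line in the log well before the array fills up.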

Keep Notes on Changes

Maintain a changelog in /mnt/raid/RAID-notes.txt listing hardware changes, rebuilds, and firmware updates. This helps when something goes sideways later.

Optional: RAID with btrfs or ZFS

Why Use btrfs or ZFS Instead of mdadm?

Both btrfs and ZFS support built-in RAID functionality. They offer snapshots, checksums, and better data integrity tools. But they also need more RAM and configuration effort.

Install btrfs Tools

For btrfs:

sudo apt install btrfs-progs

Create btrfs RAID1 Volume

If you want to use btrfs:

sudo mkfs.btrfs -m raid1 -d raid1 /dev/nvme0n1p1 /dev/nvme1n1p1

Then mount it:

sudo mount /dev/nvme0n1p1 /mnt/raid

Use Subvolumes and Snapshots

Create subvolumes:

sudo btrfs subvolume create /mnt/raid/@root

Take snapshots:

sudo btrfs subvolume snapshot /mnt/raid/@root /mnt/raid/@root_snapshot

Install ZFS (If You’re Brave)

ZFS is not in the default Pi OS repo. You’ll need to switch to Debian or Ubuntu and run:

sudo apt install zfsutils-linux

Create ZFS Mirror

Example:

sudo zpool create myzpool mirror /dev/nvme0n1p1 /dev/nvme1n1p1

ZFS Pool Management

View pool status:

zpool status

Replace a failed disk:

zpool replace myzpool /dev/nvmeXn1p1

Be Aware of RAM Limits

ZFS on Raspberry Pi works, but it’s heavy. You’ll want at least 4 GB RAM, and preferably some swap space.

Back Up More Often

Filesystem-level RAID has its own quirks. Use snapshots and external backups. Don’t rely on RAID alone.

Stick with mdadm if You Want Simplicity

If all this sounds like too much, mdadm is still solid. Keep it simple unless you need snapshots or advanced features.

Troubleshooting Common Issues

My NVMe drives don’t show up

Double-check PCIe is enabled in /boot/firmware/config.txt. Re-seat your adapter. Confirm your power supply can handle the load.

RAID array fails to assemble after reboot

Make sure /etc/mdadm/mdadm.conf contains the correct array definition and update-initramfs -u has been run.
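One common cause is a config file with no ARRAY line, or with the same line appended more than once because the tee -a step was run repeatedly. A quick check can be sketched as follows; count_array_lines and duplicate_array_lines are hypothetical names, and the optional argument only exists so the functions can be pointed at a copy of the file.

```shell
# Count ARRAY definitions in an mdadm.conf-style file.
# Argument: path to the file (default /etc/mdadm/mdadm.conf).
count_array_lines() {
    grep -c '^ARRAY ' "${1:-/etc/mdadm/mdadm.conf}"
}

# Print each ARRAY line that appears more than once in the file.
duplicate_array_lines() {
    grep '^ARRAY ' "${1:-/etc/mdadm/mdadm.conf}" | sort | uniq -d
}
```

For a single mirror you would expect count_array_lines to print 1 and duplicate_array_lines to print nothing. If duplicates show up, remove the extra lines in an editor and run sudo update-initramfs -u again.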

Boot fails with ‘cannot find root’ error

Recheck the UUID in cmdline.txt. Make sure the root filesystem is actually on /dev/md0.

One drive keeps dropping from the array

Check if that SSD is overheating or underpowered. Use nvme smart-log and dmesg to investigate.

Use Cases for RAID on Raspberry Pi 5

- Reliable NAS: Use the array to serve network shares over SMB or NFS.

- Offsite backup node: Sync encrypted backups from another system.

- Log collector: Store system or app logs redundantly.

- Read-heavy kiosk: Great for kiosks or dashboards where data loss is unacceptable.

Estimated Setup Time and Skill Level

Skill Level: Intermediate

Total Time: 90–120 minutes depending on hardware familiarity

Join the Community

Want to see how others are using RAID on Raspberry Pi?

Join the r/raspberry_pi community for shared builds, troubleshooting, and inspiration.

Closing Tips

Use RAID1 for Peace of Mind, Not Perfection

RAID1 is great for surviving a single SSD failure, but it doesn’t protect against file deletion, corruption, or catastrophic power loss. Always pair it with backups.

Keep a Spare SSD Handy

Have a spare NVMe drive of the same size ready. When one drive fails, you won’t be scrambling for a replacement.

Test Your Setup Before Trusting It

Power down, pull a drive, and see if the system still boots. Reinstall the drive and confirm the rebuild works. These dry runs are more valuable than just assuming it will work.

Power and Cooling Are Not Optional

Flaky power and high temps will mess with your RAID array more than anything else. Use a real power supply and monitor thermals.

Watch Logs Like a Hawk

Errors usually show up quietly in logs before something major breaks. Set up alerts or check the system journal (journalctl) and dmesg often.

Know When RAID Isn’t Worth It

If your use case is light, a single NVMe SSD and nightly rsync to a backup drive might be easier and safer.

Document Your Setup

Keep a text file or printout of your RAID setup, UUIDs, partition scheme, and commands used. If the Pi fails or gets reformatted, you’ll thank yourself later.

Don’t Overcomplicate Things

RAID is useful, but simplicity wins. The fewer parts and layers, the fewer points of failure. Only use what you need.

Follow Official Sources

Always check Raspberry Pi’s official documentation and trusted developers like Jeff Geerling for hardware compatibility and firmware changes.

Practice Good Backup Habits

RAID is not a backup. Set up automated, remote, or external backups using rsync, restic, or your preferred tool. Test restores, too.

FAQ

Can I use different-sized NVMe drives in RAID1?

Technically, yes, but the array will match the size of the smaller drive, so the extra capacity on the larger one goes unused. Stick with identical models if possible.

Can I boot Raspberry Pi 5 directly from NVMe RAID?

Yes, with the latest EEPROM and configuration. The root filesystem can live on RAID, but /boot typically remains on SD or USB.

Will RAID1 protect me from accidental file deletion?

No. It only mirrors physical data. Deleted or corrupted files are immediately synced to the second drive. Use real backups.

What happens if one SSD fails?

The system continues running on the surviving drive. Replace the failed one and mdadm will rebuild the array.

Is btrfs better than mdadm?

btrfs has features like snapshots and checksumming, but mdadm is simpler and more resource-efficient on low-power devices.

References

- Raspberry Pi M.2 HAT+ Documentation

- Jeff Geerling: NVMe RAID on Raspberry Pi 5

- PiDIYLab: NVMe Boot Guide

- 52Pi Blog: RAID on Raspberry Pi