Introduction

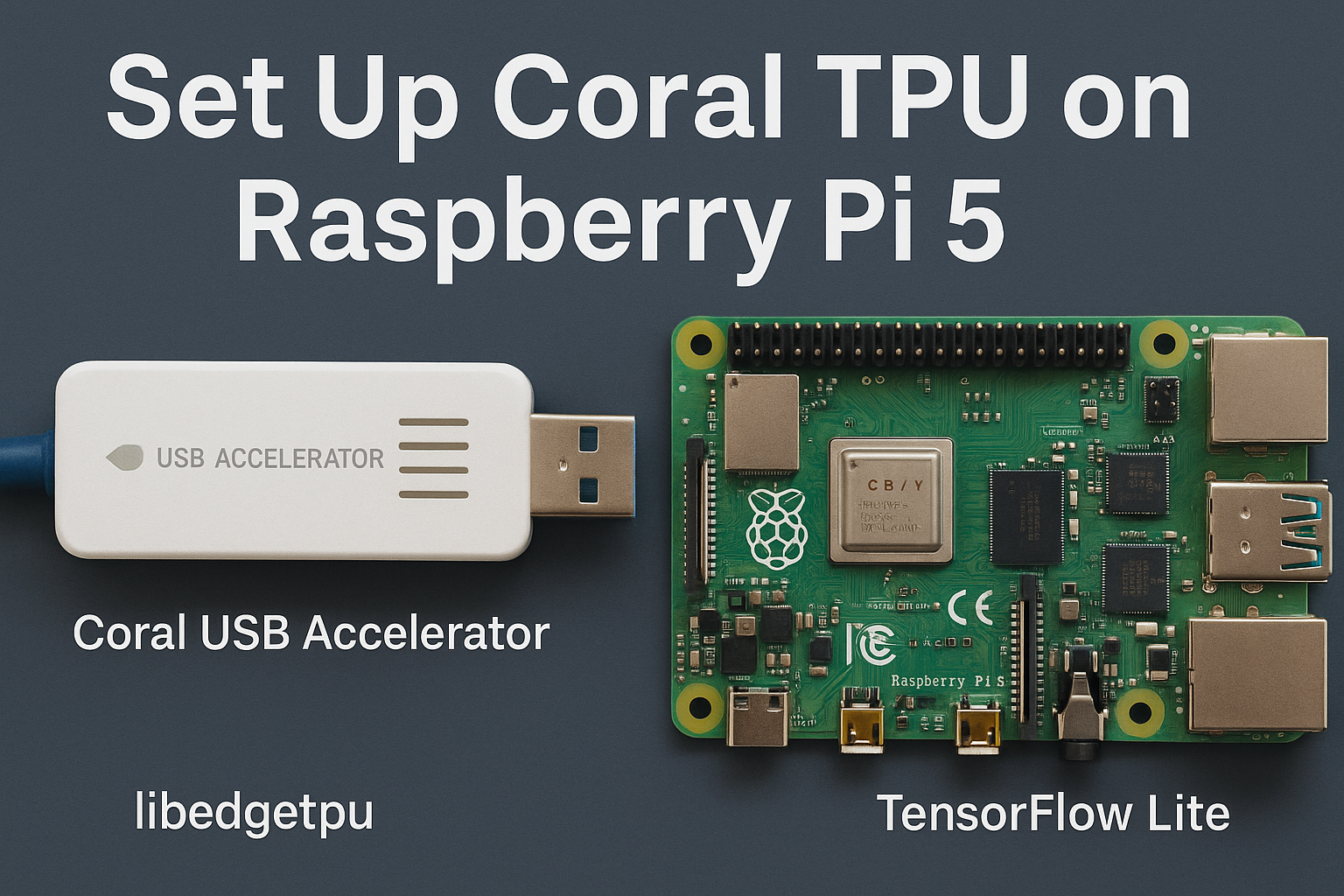

Setting up the Coral TPU on a Raspberry Pi 5 isn’t rocket science, but it sure feels like it when the USB port ghosts your hardware. The Coral USB Accelerator gives your Pi a serious speed boost for machine learning tasks, especially when you’re running TensorFlow Lite models like MobileNet. You don’t need a cluster of GPUs or a server farm in your basement. Just your Pi 5, a USB 3.0 port that actually works, and the libedgetpu runtime.

If you’re here, you’ve probably already tried plugging it in and wondered why nothing happened. Spoiler: it needs drivers, proper USB recognition, and a bit of terminal finesse. We’ll get you from zero to inference without rewriting your kernel.

Key Takeaways

- The Coral USB Accelerator works best with Raspberry Pi 5 and USB 3.0.

- You must install libedgetpu and tflite-runtime manually, no skipping steps.

- Quantized TFLite models compiled for the Edge TPU are required.

- Cooling and power stability are essential for reliable performance.

- Real-time inference for vision tasks runs smoothly when everything’s set up correctly.

Prerequisites

Hardware Needed

Before you get too excited and plug things in randomly, here’s what you actually need on your desk:

- Raspberry Pi 5 – This model finally has the horsepower and proper USB 3.0 bandwidth to run the Edge TPU without choking.

- Coral USB Accelerator – Google’s little AI stick that acts like a mini turbocharger for TensorFlow Lite models.

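- Power Supply (5V 5A USB-C recommended, 5V 3A minimum) – The Pi 5 is not exactly a power-sipping device, especially with peripherals. On a 3A supply it caps total USB current at 600mA, which leaves little headroom for the Coral.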

- MicroSD Card (32GB or higher) or USB SSD – You’ll want fast storage for logs, models, and packages.

- USB Keyboard & Mouse – Unless you’re SSH-ing in like a champ.

- Active Cooling (Fan or Heatsink) – Both the Pi and Coral TPU get warm under load.

Software Requirements

Don’t skip this part unless you enjoy troubleshooting at midnight:

- Terminal Access (SSH or Direct) – You’ll need to run commands that aren’t available via the GUI.

- Raspberry Pi OS Bookworm (64-bit) – It’s the most compatible right now, plus it ships with Python 3.11.

- Python 3.11 or Higher – Most Coral dependencies align better with newer Python versions.

- Internet Connection – For downloading packages, models, and updates.

Initial System Setup

Update and Upgrade the OS

Let’s be honest—if you haven’t run an update on your Pi since that one Reddit thread told you not to, now’s the time. Start with the basics:

sudo apt update && sudo apt full-upgrade -y

sudo reboot

After it reboots, check your kernel version:

uname -a

You’re looking for something like 6.1.x or newer, which supports the RP1 chip handling USB on the Pi 5.
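If you'd rather script that check than eyeball the uname output, here is a minimal sketch. The helper name kernel_at_least is made up for this example, and the 6.1 threshold is simply the figure quoted above.

```python
import platform
import re


def kernel_at_least(release: str, minimum=(6, 1)) -> bool:
    """Return True if a `uname -r` style string is at least `minimum`.

    Only the leading "major.minor" part is compared; an unparseable
    string is treated as "not new enough".
    """
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        return False
    return (int(match.group(1)), int(match.group(2))) >= tuple(minimum)


# platform.release() returns the same string as `uname -r`.
current = platform.release()
verdict = "looks fine" if kernel_at_least(current) else "is older than 6.1, update first"
print(f"Kernel {current} {verdict}")
```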

Enable USB 3.0 Support

Now here’s the thing: not all ports on your Pi 5 are created equal. Plug the Coral USB Accelerator into a blue USB 3.0 port. If you’re using a USB hub, make sure it’s powered.

Check that your USB ports are actually functioning:

lsusb

You should see an entry like:

Bus 001 Device 004: ID 1a6e:089a Global Unichip Corp. Coral Edge TPU

If it's missing, your Pi either didn’t recognize it or you plugged it into a sleepy port. Try switching ports, using a powered hub, or re-checking the USB cable.
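The same check can be automated, which is handy if you want a startup script to complain when the stick is missing. This is a sketch, not an official tool: find_coral is a hypothetical helper, and the second USB ID (18d1:9302, which the accelerator typically reports after its firmware has been loaded by a first inference) is an addition that does not appear in the lsusb line above.

```python
import re

# 1a6e:089a is the ID shown above; 18d1:9302 is what the stick usually
# reports once its firmware has been loaded. Treat both as "detected".
CORAL_IDS = {"1a6e:089a", "18d1:9302"}

LSUSB_LINE = re.compile(
    r"Bus (\d+) Device (\d+): ID ([0-9a-f]{4}:[0-9a-f]{4})", re.IGNORECASE
)


def find_coral(lsusb_output: str):
    """Return (bus, device, usb_id) for every Coral entry in lsusb text."""
    hits = []
    for line in lsusb_output.splitlines():
        match = LSUSB_LINE.search(line)
        if match and match.group(3).lower() in CORAL_IDS:
            hits.append((match.group(1), match.group(2), match.group(3).lower()))
    return hits


sample = (
    "Bus 001 Device 004: ID 1a6e:089a Global Unichip Corp. Coral Edge TPU\n"
    "Bus 001 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub\n"
)
print(find_coral(sample))  # [('001', '004', '1a6e:089a')]
```

On the Pi itself you would feed it real output, for example the stdout of subprocess.run(["lsusb"], capture_output=True, text=True).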

Installing Coral TPU Drivers

Install libedgetpu Runtime

The Coral TPU isn’t plug-and-play unless you count frustration as a feature. The drivers you need come from the official Coral repo. Google hasn’t baked them into Raspberry Pi OS, so here’s the manual route:

echo "deb https://packages.cloud.google.com/apt coral-edgetpu-stable main" | sudo tee /etc/apt/sources.list.d/coral-edgetpu.list

curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

sudo apt update

sudo apt install libedgetpu1-std

What’s with libedgetpu1-std? That’s the standard runtime, which runs the Edge TPU at a reduced clock. There’s also libedgetpu1-max, which runs it at maximum clock frequency: faster, but hotter and hungrier for power. If you’re not slapping a fan on your Coral stick, go with std.

Set Up Udev Rules

Without udev rules, the TPU might be invisible to Python even if lsusb sees it. Make sure the rule file exists:

cat /etc/udev/rules.d/99-edgetpu-accelerator.rules

If it’s missing, reinstall:

sudo apt install --reinstall libedgetpu1-std

Then reload rules:

sudo udevadm control --reload-rules && sudo udevadm trigger

Install Python Bindings (TensorFlow Lite Runtime)

Next, you’ll need the Python library that talks to the Edge TPU. This is separate from full TensorFlow:

pip3 install tflite-runtime

Check it installed:

python3 -c "import tflite_runtime.interpreter"

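No errors? Great. If pip refuses with an “externally-managed-environment” error, which is the default behavior on Bookworm, create a virtual environment (python3 -m venv) and install inside it. If you see something about missing modules or incompatible architecture, double-check your Python version or install using a wheel file specific to ARM64.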
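The one-liner only proves the Python package imports. A slightly fuller sketch also tries to load the Edge TPU delegate, which is where most setups actually fail. The function name check_edgetpu_runtime is invented for this example; libedgetpu.so.1 is the usual Linux library name, but treat it as an assumption if your install differs.

```python
def check_edgetpu_runtime(library: str = "libedgetpu.so.1"):
    """Return (ok, message) describing whether the Edge TPU delegate loads."""
    try:
        # Imported here so a missing package is reported, not raised.
        from tflite_runtime.interpreter import load_delegate
    except ImportError as exc:
        return False, f"tflite_runtime is not importable: {exc}"

    try:
        load_delegate(library)
    except (ValueError, OSError, RuntimeError) as exc:
        # Typical causes: libedgetpu not installed, or no device/permissions.
        return False, f"tflite_runtime imported, but the delegate failed: {exc}"

    return True, "tflite_runtime and the Edge TPU delegate both loaded"


ok, message = check_edgetpu_runtime()
print("OK" if ok else "PROBLEM", "-", message)
```

A failure on the second step with the stick plugged in usually points back at the driver install or the udev rules rather than at Python.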

Verifying TPU Detection

Check Device Recognition

So, you’ve installed the drivers and plugged in the Coral USB Accelerator like a champ. But does your Pi actually know it’s there? Let’s confirm.

Run:

lsusb

Look for this in the output:

ID 1a6e:089a Global Unichip Corp. Coral Edge TPU

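Still not there? Try rebooting the Pi or swapping USB ports. Make sure it’s plugged into a USB 3.0 port (the blue one), not a USB 2.0 port, where it runs much slower. Also note that after the first successful inference the device usually re-enumerates as 18d1:9302 Google Inc., so either ID means it was detected.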

Next, check your system logs:

dmesg | grep -i usb

Look for lines mentioning Global Unichip or edgetpu. If you see errors like “device descriptor read/64, error -71”, it’s likely a power or cable quality issue.
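Scrolling through dmesg by hand gets old fast, so here is a rough sketch that flags suspicious lines for you. Only the "error -71" pattern comes from the text above; the under-voltage and disconnect patterns are assumptions about what the kernel typically logs, and diagnose_usb_log is a made-up helper name.

```python
# Lowercase substrings to look for, mapped to a likely cause.
# Only "error -71" is taken from the article; the rest are assumptions.
SUSPECT_PATTERNS = {
    "error -71": "USB protocol error, often a weak cable or power dip",
    "under-voltage": "power supply sagging under load",
    "undervoltage": "power supply sagging under load",
    "usb disconnect": "device dropped off the bus",
}


def diagnose_usb_log(dmesg_text: str):
    """Return (hint, original_line) pairs for every suspicious log line."""
    findings = []
    for line in dmesg_text.splitlines():
        lowered = line.lower()
        for needle, hint in SUSPECT_PATTERNS.items():
            if needle in lowered:
                findings.append((hint, line.strip()))
                break  # one hint per line is enough
    return findings


sample_log = (
    "[  12.3] usb 2-1: new SuperSpeed USB device number 2 using xhci-hcd\n"
    "[  15.8] usb 1-1: device descriptor read/64, error -71\n"
)
for hint, line in diagnose_usb_log(sample_log):
    print(f"{hint}: {line}")
```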

Run a Test Inference

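First, grab a test model and script from Coral’s GitHub or from an example package. The classify_image.py example imports the pycoral library, so that needs to be installed alongside tflite-runtime before the script will run: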

mkdir coral_test && cd coral_test

wget https://github.com/google-coral/test_data/raw/master/mobilenet_v2_1.0_224_inat_bird_quant_edgetpu.tflite

wget https://github.com/google-coral/pycoral/raw/master/examples/classify_image.py

wget https://coral.ai/images/parrot.jpg

Run the test script:

python3 classify_image.py \
  --model mobilenet_v2_1.0_224_inat_bird_quant_edgetpu.tflite \
  --input parrot.jpg

Expected output should show a class ID, label (bird type), and inference time.

If you get an error like “Failed to load delegate from libedgetpu.so”, then the driver didn’t install properly or permissions are off.
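If you want to see roughly what a classification script does under the hood, the sketch below runs one image through the model with tflite_runtime alone. It is not the Coral example script: classify and top_k are names invented here, it assumes Pillow and NumPy are installed, and it returns raw quantized scores without mapping them to labels.

```python
def top_k(scores, k=3):
    """Return the k highest (class_id, score) pairs, best first."""
    ranked = sorted(enumerate(scores), key=lambda pair: pair[1], reverse=True)
    return [(int(index), float(score)) for index, score in ranked[:k]]


def classify(model_path, image_path, k=3):
    """Run one image through an Edge TPU model and return the top-k classes."""
    # Imported lazily so top_k() stays usable without these packages.
    import numpy as np
    from PIL import Image
    from tflite_runtime.interpreter import Interpreter, load_delegate

    interpreter = Interpreter(
        model_path=model_path,
        experimental_delegates=[load_delegate("libedgetpu.so.1")],
    )
    interpreter.allocate_tensors()

    # Resize the image to whatever input shape the model declares.
    inp = interpreter.get_input_details()[0]
    _, height, width, _ = inp["shape"]
    image = Image.open(image_path).convert("RGB").resize((width, height))
    batch = np.expand_dims(np.asarray(image, dtype=inp["dtype"]), axis=0)

    interpreter.set_tensor(inp["index"], batch)
    interpreter.invoke()

    out = interpreter.get_output_details()[0]
    return top_k(interpreter.get_tensor(out["index"])[0], k)


# The ranking helper on its own, with made-up scores:
print(top_k([0.1, 0.7, 0.2], k=2))  # [(1, 0.7), (2, 0.2)]
```

On the Pi, calling classify("mobilenet_v2_1.0_224_inat_bird_quant_edgetpu.tflite", "parrot.jpg") should return class IDs with scores in the 0 to 255 range, since the output tensor is quantized.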

Running Performance Tests

Basic Inference Speed Checks

Let’s be honest—if you plugged in a TPU and it’s slower than your CPU, you’re gonna feel cheated. So, let’s test if it’s actually speeding things up.

Use Coral’s benchmark tool:

sudo apt install edgetpu-examples

Run the benchmark:

edgetpu_test

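Or time your .tflite model from Python. The module path below is not part of every tflite-runtime build; if Python reports “No module named tflite_runtime.examples”, time interpreter.invoke() yourself with time.perf_counter() instead:

python3 -m tflite_runtime.examples.benchmark_model \
  --model mobilenet_v2_1.0_224_inat_bird_quant_edgetpu.tflite \
  --use_tpu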

You’ll get output like:

Average Inference Time: 5.7 ms

Now try the same model without the TPU:

python3 -m tflite_runtime.examples.benchmark_model \
  --model mobilenet_v2_1.0_224_inat_bird_quant.tflite

If you’re seeing numbers like 70ms without the TPU and 5ms with it, that means your TPU is working exactly how it should.
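For a comparison you control end to end, a small timing helper is enough. This is a sketch under a couple of assumptions: time_calls and speedup are hypothetical names, and the warm-up calls are there because the first Edge TPU invocation is usually slower while the model is loaded onto the device.

```python
import statistics
import time


def time_calls(fn, runs=50, warmup=5):
    """Return the mean duration of fn() in milliseconds.

    Warm-up calls are executed first and excluded from the average.
    """
    for _ in range(warmup):
        fn()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(samples)


def speedup(cpu_ms, tpu_ms):
    """How many times faster the TPU run is than the CPU run."""
    return cpu_ms / tpu_ms


# The example figures from the text: 70 ms on CPU vs 5 ms on the TPU.
print(f"{speedup(70.0, 5.0):.1f}x faster")  # prints "14.0x faster"
```

With two interpreters already set up (one with the Edge TPU delegate, one without), you would call time_calls(tpu_interpreter.invoke) and time_calls(cpu_interpreter.invoke) and pass the two results to speedup.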

Stress Testing and Heat Monitoring

Want to see what happens when you throw some real load on it? Run this:

for i in {1..100}; do
  python3 classify_image.py \
    --model mobilenet_v2_1.0_224_inat_bird_quant_edgetpu.tflite \
    --input parrot.jpg > /dev/null
done

Then check temps:

vcgencmd measure_temp

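If your Pi starts roasting past 70°C, it’s time to slap a fan on that board. Note that vcgencmd only reports the Pi’s own SoC temperature, so it tells you nothing about the Coral stick itself, which needs airflow too under sustained load. Some USB hubs also overheat under load, so watch out for power throttling.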
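To log temperatures during a stress run instead of checking once at the end, you can parse the vcgencmd output in Python. A minimal sketch: parse_temp and needs_cooling are invented names, and the 70°C limit is just the rule of thumb from above.

```python
import re


def parse_temp(vcgencmd_output: str) -> float:
    """Extract degrees Celsius from output like "temp=48.3'C"."""
    match = re.search(r"temp=([\d.]+)'C", vcgencmd_output)
    if match is None:
        raise ValueError(f"unexpected vcgencmd output: {vcgencmd_output!r}")
    return float(match.group(1))


def needs_cooling(temp_c: float, limit_c: float = 70.0) -> bool:
    """True once the reading is past the limit."""
    return temp_c > limit_c


reading = parse_temp("temp=48.3'C\n")
print(reading, "needs cooling" if needs_cooling(reading) else "is fine")
```

On the Pi, the input would come from subprocess.run(["vcgencmd", "measure_temp"], capture_output=True, text=True).stdout, polled every few seconds while the loop runs.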

Common Errors and Fixes

Driver Not Detected

Symptom: You run lsusb and there’s no sign of the Coral device.

Fixes:

- Try a different USB 3.0 port. Not all ports behave the same, especially on older or overloaded hubs.

- Use a powered USB hub. The Edge TPU draws more power than your Pi’s onboard USB may provide.

- Reinstall udev rules. Run:

sudo apt install --reinstall libedgetpu1-std

And don’t forget to reboot.

Runtime Crashes

Symptom: Python throws errors like Failed to load delegate from libedgetpu.so.

Fixes:

- Make sure you’re using a quantized .tflite model compiled for the Edge TPU.

- Your Python version might be unsupported by the installed tflite_runtime. Try:

pip3 uninstall tflite-runtime

pip3 install tflite-runtime==2.10.0

- Reinstall the runtime or use a virtual environment.

USB Instability

Symptom: TPU shows up, then disappears, or inference randomly fails.

Fixes:

- Check for brownouts. Use dmesg | grep voltage.

- Use a better power supply. Cheap ones drop below 5V under load.

- Add cooling. High temps cause the Pi or TPU to throttle or disconnect.
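Besides grepping dmesg, the Pi firmware keeps its own record of brownouts and throttling, readable with vcgencmd get_throttled. That command is not mentioned in the fixes above, so take this as an optional extra: a sketch that decodes its hex bitmask, with decode_throttled being a made-up helper name.

```python
# Bit positions documented for `vcgencmd get_throttled`.
THROTTLE_FLAGS = {
    0: "under-voltage detected",
    1: "ARM frequency capped",
    2: "currently throttled",
    3: "soft temperature limit active",
    16: "under-voltage has occurred",
    17: "ARM frequency capping has occurred",
    18: "throttling has occurred",
    19: "soft temperature limit has occurred",
}


def decode_throttled(output: str):
    """Turn output like "throttled=0x50000" into a list of active flags."""
    value = int(output.strip().split("=", 1)[1], 16)
    return [
        label
        for bit, label in sorted(THROTTLE_FLAGS.items())
        if value & (1 << bit)
    ]


for flag in decode_throttled("throttled=0x50000"):
    print(flag)
```

An empty list (throttled=0x0) means the Pi has seen no power or thermal trouble since boot, which makes a flaky cable or hub the more likely culprit.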

Model Mismatch

Symptom: Inference fails with “Unsupported data type” or “Cannot allocate tensors”.

Fixes:

- Download Edge TPU-compiled models only. Regular .tflite files won’t work.

- Use Coral’s Edge TPU Compiler for custom models:

edgetpu_compiler your_model.tflite

Optimizing Performance

Model Quantization Tips

The Coral TPU only works with fully quantized models. That means the model’s weights and activations are converted from floating point to 8-bit integers (usually uint8), making the model faster and more compact. If you’re using TensorFlow Lite, here’s how to prep a model:

- Train your model normally

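- Use full-integer post-training quantization like this:

import tensorflow as tf
converter = tf.lite.TFLiteConverter.from_saved_model('your_model_path')
converter.optimizations = [tf.lite.Optimize.DEFAULT]
converter.representative_dataset = representative_data_gen  # placeholder: your generator yielding sample inputs
converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
tflite_model = converter.convert()

- Compile it for the TPU (the compiler only runs on x86-64 Linux, so do this step on a desktop machine rather than the Pi):

edgetpu_compiler your_model.tflite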

If you’re using a pre-built model, make sure the filename ends with _edgetpu.tflite. That’s a hint it’s already been compiled for TPU inference.
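To make "converted from floating point to integers" a bit more concrete, here is the affine mapping that 8-bit quantization boils down to, as a plain-Python sketch. The scale and zero point below are illustrative values chosen for easy arithmetic, not numbers from any real model.

```python
def quantize(x, scale, zero_point, qmin=0, qmax=255):
    """Map a float to an 8-bit integer: q = round(x / scale) + zero_point."""
    q = round(x / scale) + zero_point
    return max(qmin, min(qmax, q))  # clamp to the uint8 range


def dequantize(q, scale, zero_point):
    """Map the integer back to an approximate float."""
    return scale * (q - zero_point)


# Illustrative parameters: floats in roughly [-1, 1) spread over 0..255.
scale, zero_point = 1 / 128, 128

q = quantize(0.5, scale, zero_point)
print(q, dequantize(q, scale, zero_point))  # 192 0.5
print(quantize(2.0, scale, zero_point))     # 255 (out of range, so clamped)
```

Values that fall outside the representable range get clamped, which is one reason the converter wants representative sample data: it uses those samples to pick a scale and zero point that cover the values the model actually sees.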

Power and Cooling Solutions

When inference starts lagging or crashing randomly, it’s often a power or heat issue—no fancy AI needed to detect that.

- Use a powered USB 3.0 hub – Especially if you’re running off SSDs or using multiple peripherals.

- Add a heatsink or fan – Both the Pi 5 and the Coral TPU generate heat quickly during continuous tasks.

- Monitor temps – Use:

vcgencmd measure_temp

- Use the standard runtime (libedgetpu1-std) – The -max version pushes higher performance but with more heat and power demand.

If you’re getting good inference times, stable operation, and no signs of overheating or undervoltage, you’re already in good shape.

Using Coral TPU in Real Projects

Vision Applications

The Coral TPU shines with computer vision tasks, especially on the Raspberry Pi 5. Here are real-world applications you can run without melting your CPU:

- Object Detection: Use models like MobileNet SSD to detect people, pets, or products in real time.

- Image Classification: Classify images into categories (birds, tools, faces) with low latency.

- Face Recognition: Detect and identify faces using quantized models.

- Gesture Recognition: Combine with a camera to trigger actions based on hand movement.

- Barcode/QR Scanning: Lightweight models can decode barcodes with high accuracy.

Try this with the Coral detection example:

python3 detect_image.py \
  --model ssd_mobilenet_v2_coco_quant_postprocess_edgetpu.tflite \
  --input your_image.jpg

Make sure you’ve got a USB webcam or Pi camera set up if you’re doing live video inference.
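Once a detection model has run, you still have to turn its raw output into something drawable. The sketch below assumes the common SSD convention of normalized [ymin, xmin, ymax, xmax] boxes with parallel lists of class IDs and scores; check your model's output tensors before relying on that order. Both helper names are invented for this example.

```python
def to_pixels(box, image_width, image_height):
    """Convert a normalized [ymin, xmin, ymax, xmax] box to pixel coordinates.

    Returns (left, top, right, bottom), clamped to the image bounds.
    """
    ymin, xmin, ymax, xmax = (min(1.0, max(0.0, value)) for value in box)
    return (
        int(xmin * image_width),
        int(ymin * image_height),
        int(xmax * image_width),
        int(ymax * image_height),
    )


def keep_confident(boxes, class_ids, scores, threshold=0.5):
    """Drop detections whose score is below the threshold."""
    return [
        (int(class_id), float(score), tuple(box))
        for box, class_id, score in zip(boxes, class_ids, scores)
        if score >= threshold
    ]


print(to_pixels([0.25, 0.5, 0.75, 1.0], 640, 480))  # (320, 120, 640, 360)
```

The 0.5 threshold is only a starting point; raise it if you get too many false positives on your own footage.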

Edge AI Use Cases

The combo of Coral TPU and Raspberry Pi 5 is perfect for edge deployments where cloud compute isn’t an option. Here are solid starter projects:

- Smart Security Camera – Detects motion, people, or animals.

- Factory Defect Detection – Run real-time image classification on an assembly line.

- Parking Spot Monitor – Tracks vehicle presence using object detection models.

- Environmental Monitoring – Classify plant health from leaf images.

- Voice-Activated Devices – Use Coral TPU for wake-word detection with low latency.

Stick to models that are under 10MB and optimized for TFLite. Anything larger might choke your Pi or exceed the TPU’s capabilities.

FAQ

Q: Can I use the Coral TPU with a Pi 4 or Pi 3 instead of Pi 5?

Yes, but expect slower performance and more USB bottlenecks. The Pi 5 has a much faster USB controller via the RP1 chip.

Q: Why does the TPU disappear when I plug it in?

It’s usually a power issue. The Pi 5’s USB ports can’t always provide enough juice, especially if you’re using other peripherals. Use a powered hub.

Q: What models work with Coral TPU?

Only quantized TFLite models that have been compiled with edgetpu_compiler. You can’t just throw any TensorFlow model at it.

Q: How do I know the TPU is doing the work and not the CPU?

Run the Edge TPU-compiled model on the TPU and the plain quantized version on the CPU, then compare inference times. If the TPU is working, it should be roughly 10–15x faster.

Q: Is there a difference between libedgetpu1-std and libedgetpu1-max?

Yes. -max is faster but uses more power and generates more heat. -std is more stable for small boards like the Pi.
